Toolkit For Staff

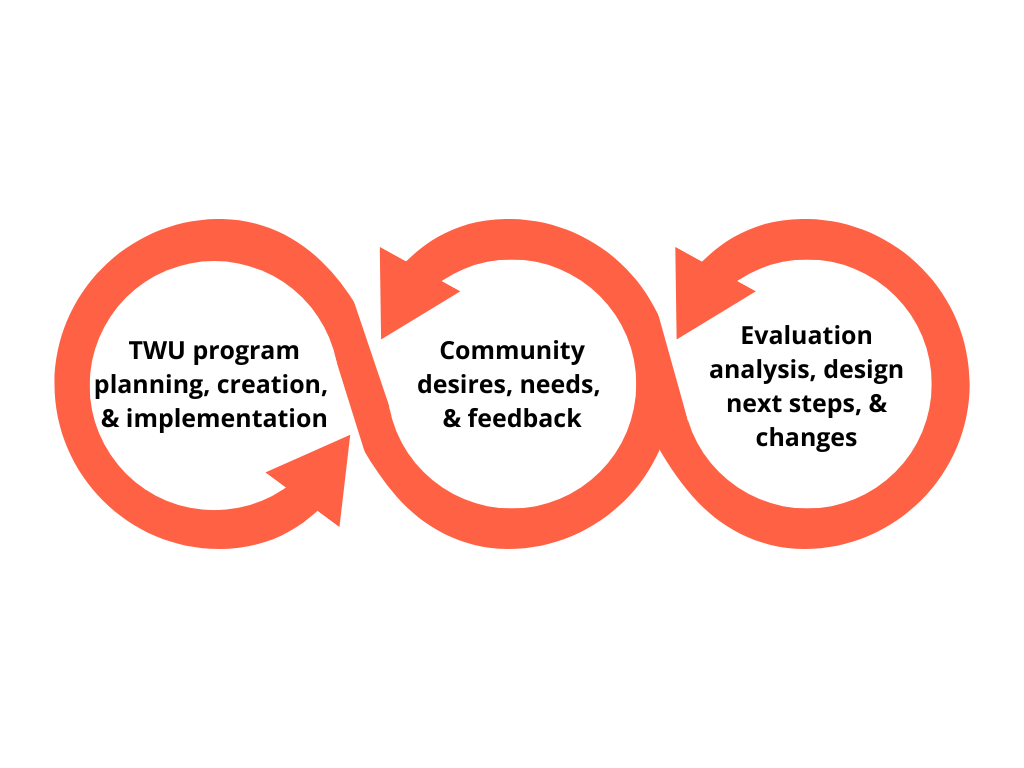

Cycles of reflection and renewal

Working directly with Tewa Women United (TWU) staff, Amanda Flores of Florezca created this living toolkit to guide and resource internal evaluation efforts. The aim was to demystify the process and to create space for growth in practices over time and across programs. The toolkit includes information about the field of Indigenous Evaluation as well as TWU-specific protocols, practices and resources. We hope this will be a site that can be updated continually and provide support to TWU staff long into the future.

Essential Questions

- How can staff document success, identify areas for growth, and make informed, meaningful and supportive changes?

- How do TWU frameworks enact knowledge creation within evaluation at TWU?

- How are evaluation processes a form of collective care for staff and participants that encourage continued growth individually and collectively?

- When and how do we identify what to measure, how to measure, and how to analyze + share with the community?

Overview of TWU Frameworks

- Relational-tivity

- 2 World Butterfly Model

- Opide

- MHBS

- Trauma Healing Rocks

- Corn Model

Indigenous Approaches to Evaluation

“We have always done evaluation in a very decolonized way based on trust.” –Deborah Mytube Shipman, Chickasaw Nation, quoted in “Building the Sacred: An Indigenous Evaluation Framework for Programs Serving Native Survivors of Violence”

As Indigenous communities, we have always engaged in processes of evaluation to sustain our cultures, lifeways and homes. Below are some of the common threads + values among Indigenous tribes and communities. These were compiled from research into Indigenous evaluation methods and existing practices at Tewa Women United. A link to the resources can be found [here].

- Story/Story Sharing

- Building Deep Relationships + Cultivating Trust

- Intergenerational Insight: Perspective of Elders, Wisdomkeepers + Youth

- Strengths Based

- Connection to Land + Place

- Sovereignty–Personal + Tribal

- Non Linear

- Community Engaged

- Reciprocity + Knowledge Sharing

- Indigenous Data Sovereignty

- ?

How do these processes show up in your programs? How can they be highlighted and uplifted?

Example from “It’s Only New Because It Has Been Missing for so Long: Indigenous Evaluation Capacity Building” (Anderson et al.)

Designing the Evaluation Process

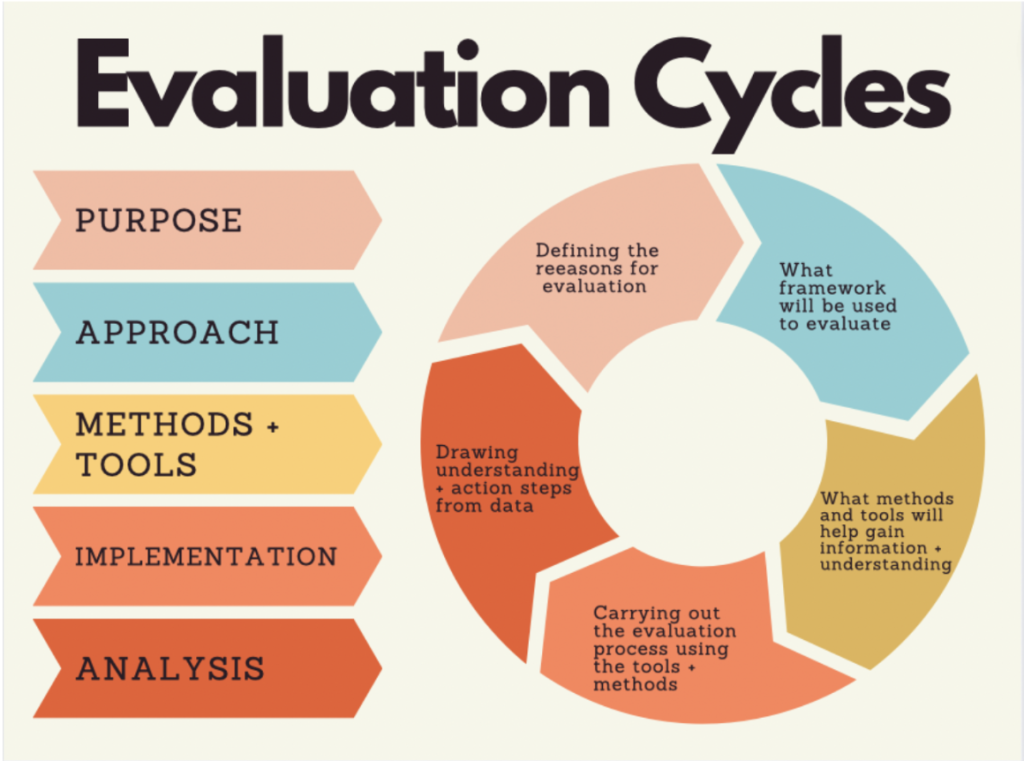

The evaluation cycle: catch the vision for Indigenous evaluation.

Purpose

- What is the purpose of our evaluation?

- What is the goal of our evaluation?

Approach

- What information is needed to develop an understanding + direction?

- What is the intended direction that staff wants to move in?

- What information would staff like to gain from the evaluation process?

Methods and Tools

- What methods and tools are needed for the evaluation process?

- What learning and resources are needed to properly use these tools?

Implementation

- What processes of understanding and relating does this evaluation process require?

- What timeline and other documentation aids would be useful during implementation?

Analysis

- How do we make meaning in an inclusive way?

- How do we ground-truth our learnings?

- How do we share with community and co-create action steps?

More information on evaluation planning can be found on page 130 of the Kellogg Foundation’s Evaluation Toolkit.

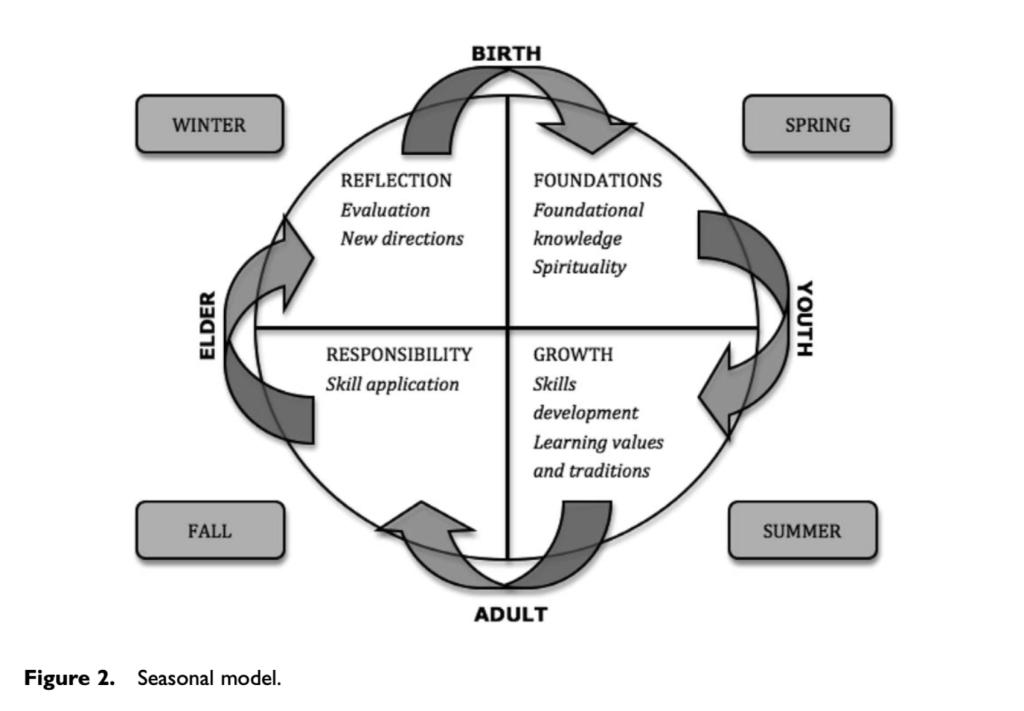

Timelines + Seasonal Planning

Through Tewa values and culture, TWU has always worked to align programs with seasons and life cycles. Honoring the non-linear process of reflection, creativity and emergent understanding, how can staff collectively work toward cycles of reflection + evaluation that are directed by the cyclical nature of life? Below are some questions about the frequency of different evaluation tools and methods.

| Process | Timing |
|---|---|
| Designate time for regular reflection + evaluation periods; Integrating Loving Accountability | |
| Periodic analysis | |
| Identifying action steps | |

Example from “It’s Only New Because It Has Been Missing for so Long: Indigenous Evaluation Capacity Building” (Anderson et al.)

Evaluative Documentation

The Role of Documentation is to:

- Nurture the basis for staff collaboration, forward momentum and alignment of program processes.

- Tell the story of the programs, projects and communities that are a part of TWU.

- Understand the cycles of growth, reflection, impact within the life of programs/organization.

- Engage collective care for community by providing a way to capture and comprehend experiences (individually and collectively) and to share the documented impact and learnings.

“Evaluation, as storytelling, becomes both a way of understanding the content of our program as well as a methodology to learn from our story. By constantly reviewing our story, we enter into a spiral of reflection, learning from what we are doing and moving forward.”

-American Indian Higher Education Consortium Indigenous Evaluation Framework

1. Process Points–Documentation Baseline for TWU-Wide Evaluation

| Program Design | Calendar | Pre Event Outreach | Attendance/Sign In Sheets | Debrief/Follow Up |
|---|---|---|---|---|
| Role/Purpose of Program and Intended Goals | Log events in calendar | Share info + materials with Maia | # of attendees | Individual |
| Intended Community Reach | Single event or recurring? | Share info w/program partners | Demographics | TWU team; TWU all staff |
| Connection + Alignment w/program area focus/goals | Estimation of hours spent with program participants? | Share internally w/TWU programs | County | With the community |
| TWU Values + Frameworks explored | Choose time for debrief, documentation, | Share w/ community | Other information needed specific to program? | With the external evaluator |

2. Process Points–Documentation In Depth

| Eval Tools | Forms or Consent Needed? | When | Logged Where | Form/Template Links | Next Steps Needed |
|---|---|---|---|---|---|
| Eval Baseline (Calendar, Sign Ins) | Log events in calendar | Share info + materials with Maia | | | |
| Debriefs | Template for debriefs | At mid point and/or close of program cycles | | | |
| Eval Questions/Domains | Example from A’Gin | At start of program design | | | |
| Photo/Videos | Consent Form | During programming upon the completion of consent forms | | | |
| Surveys (Quantitative) | Sometimes requires a consent form; inform participants of anonymity and how data will be stored and used | Pre or Post Surveys for programs | | | |
| Informal Check in (Qualitative) | N/A | | | | |
| Circles, Focus Groups or Interviews (Qualitative) | Consent Form | | | | |

Overview of Tools/Methods for Data Collection + Documentation

| Tool | Purpose/Intention of Tool | How To Use |
|---|---|---|
| 4 Core Questions Debrief | Bring awareness and understanding to the wholeness of experiences, from what went well to what we are learning | Where is the joy? What challenges are helping us grow? What did you learn? What did you love? |
| Archiving | A system to maintain databases of data and previous documentation + evaluation findings | Maintenance of program records, participant information and program history. Contributes to a body of TWU-specific data and longitudinal data on program impact. |
| Communication Logs/Team Journals | A log of communication between program partners or participants; a journal to maintain notes which can serve to track program formation and progress | Template Links |
| Consent + Confidentiality Practices | Respecting privacy of participants through obtaining consent for interviews and use of information in evaluation process; maintaining the confidentiality of information shared both in writing and verbally. | Links to: |
| Creative Assessments | Collecting data and documenting programs through art, music, video, etc. to capture the uniqueness of culture, identities, values and stories | To provide multiple modes of engaging with data that create space for deeper expression |
| Debrief Meetings + Inquiry Questions | | |
| Formal + Informal Check Ins | Frequent opportunities to keep communication channels open, show care, raise questions or concerns | Communicating with staff, external partners, funders to listen to: |
| Interviews | 1:1 conversations with program participants, partners, etc. to honor relational-tivity and create safe and open spaces to connect deeply and share learning, feedback, insights | Record w/permission, transcribe and archive |
| Other project documentation | | |
| Plus/Delta | A tool to use in meetings or debriefs to understand what went well, what was learned, what needs to change | To provide information on a more frequent basis about program needs, changes and insights learned |
| Reports | To capture, summarize and present data; to share with communities and partners as part of a feedback loop | To gather data after the period of review and analysis into a coherent document that can make meaning and be shared with community, staff, funders, etc. |
| Sign In Sheets + Attendance Logs | Tracking participation, who is attending meetings and how many are in attendance | Template Links |
| Story Mapping | Mapping how stories are formed, the narrative, dreams, intended outcomes and key collaborators + participants | Link to story mapping tool. |
| Surveys + Questionnaires | A series of questions designed to develop understanding around themes + areas of inquiry | Used to gather qualitative and quantitative data |
| Talking Circles + Focus Groups | Group conversations based around connection and inquiry into experiences of TWU programs, community needs, etc. | To build and strengthen community relationships, gather qualitative data on TWU and programs |
| Timelines | Tracking project timelines in order to meet goals, meet community needs with consideration and to consider whole life cycle of projects and how to evaluate them within those cycles | To create a flow of events and key points for gathering data, outreach, events, etc. |

Technology Tools for Data Collection

Each documentation and evaluation process is tied into at least one of the online platforms below. To best keep track of data, photos, and reports, work with your program team to choose which platforms will best support your record keeping based on your evaluation needs.

| Technology | Purpose + Suggestions |
|---|---|
| Otter AI | |
| Google Suite | |
| Monday.com | |
| Photo Voice | |
| Gantt Charts | |
| Spreadsheets | |

Analysis: Creating Shared Understanding

For comprehensive suggestions on data analysis please refer to page 166 of the Kellogg Foundation Evaluation Toolkit.

- Periodic Analysis–Determine timelines for analyzing data.

- Qualitative Analysis–Qualitative analysis includes reviewing, coding and analyzing information from interviews, story circles and creative assessments. The overview in the Kellogg Evaluation Toolkit provides information on how to code data, generate themes and generate questions to interpret the findings.

- Quantitative Analysis–Quantitative analysis includes reviewing data from sign in sheets, surveys, and other sources as it relates to evaluation questions. The overview in the Kellogg Evaluation Toolkit provides tools for performing statistical analysis on the numbers collected from data sources.

- Inclusive Process–It’s important to develop creative ways to include everyone who is affected by the evaluation–staff, community, partners, etc. The lens with which you analyze is greatly affected by your lived experience.

Reciprocity: Sharing Data with Community

The aim of sharing data with community is to make the information shared by participants available for their review, to continue story sharing, and to sustain a sense of connection to TWU programs. There are many ways to develop ongoing avenues of reciprocity through data sharing.

Feedback Loops

- Ideas for built-in sharing with community: informal check ins during programs, social media questions + polls, creative visioning sessions to brainstorm with community, etc.

- Create an opportunity to share evaluation findings with community in service to transparency, mutual growth and resource sharing.

Scenario example:

Host a gathering of program participants with a nourishing meal provided in one of TWU’s community spaces (the new property, healing foods oasis or another meaningful place of connection).

Provide multiple modalities for participants to engage with the data, e.g. infographics, narrative reports, film, photography, artistic renderings, audio.

The key is to make the information accessible, easy to digest, and engaging to a wide range of learning styles.

- Ways to Share

  - Written and visual reports made available online or at TWU

  - Interactive presentations with communities

  - Sharing with program participants 1:1 or in small groups

  - How else?

- Timing of Sharing

  - Check ins + formative assessments as programs progress

  - Sharing key findings + themes from completed evaluation processes

  - Determine at what points in programmatic calendar to check in and/or share back with community/participants

Glossary of Terms

Discussing these terms in small groups or by program area can help co-create a practical understanding of evaluation the TWU way.

- Indigenous Evaluation

- Indigenous Data Sovereignty

- Storysharing

- Strengths based

- Capacity building

- Assessment

- Methodology

- Framework

- Culturally Responsive Evaluation

- Formative Assessment

- Summative Assessment

- Developmental Eval/Assessment

- Mixed Methods

- Evaluation Approach

- Evaluation Philosophy

- Data Gathering/Collection

- Metaphor based practices

- Quantitative

- Qualitative

- Theory of Change

- Performance Measures

- Program Fidelity

- Metrics

- Analysis

Appendix

- Examples of Tools + Evaluation cycles from scholarly articles

- TWU’s bibliography of resources related to Indigenous Evaluation